Something About Mainframe

Mainframe: a term often heard when movie protagonists talk of a platform where tons of data, much of it sensitive, are stored and secured. It conjures an image of big machines whirring 24 hours a day, 7 days a week, all year round, processing information for government, business, manufacturing, and other major industries.

The name comes from the way the machine was built: all units (processing, communication, etc.) were hung in a frame. The main computer was built into a frame, hence “mainframe.” But how did the mainframe come into being? How did it evolve? Who were the pioneers, and which companies were, and still are, involved?

History of Mainframe Computing

Before the mainframes we have today, the quest for better data and information processing started with the building of ENIAC (Electronic Numerical Integrator and Computer), begun in 1943. It was followed by the Mark I, BINAC, Whirlwind and UNIVAC (Universal Automatic Computer), which was delivered to the US Census Bureau in 1951 and is considered the first mass-produced computer. UNIVAC’s central complex was about the size of a one-car garage (14 feet by 8 feet by 8.5 feet high) and had to be cooled constantly by a high-capacity chilled-water and blower air-conditioning system, because its vacuum tubes generated an enormous amount of heat.

IBM entered the picture after UNIVAC, introducing its first generation of mainframes in 1952 with the IBM 701, the first of its pioneering IBM 700 series. Developed in one of IBM’s laboratories, the 701 was considered by Columbia University to be the first large-scale commercially available computer system for solving complex scientific, engineering and business problems. Sometimes referred to as the “Defense Calculator,” it raised the number of additions and subtractions per second from 11,000 to 16,000.

In 1959, IBM upped the ante by launching its second generation of computers with the IBM 1401, an all-transistorized data processing system that put the capabilities of electronic data processing within reach of smaller businesses.

Not long after, in 1964, IBM changed the landscape with a bold and risky move into its third generation of computers: the System/360. It was the product of IBM’s $5 billion investment in a new architecture designed both for data processing and for compatibility across a wide range of performance levels. The rest, as they say, is history: virtual storage arrived with the System/370 in the 1970s, the Y2K-compliant OS/390 followed in the 1990s, leading up to the zSeries we know today.

Origin of COBOL

In parallel with the mainframe’s advances in the 1950s came the desire for a programming language that would be “common,” or compatible, across a significant group of manufacturers. A joint effort by the computing industry, major universities and the US government, through the Conference on Data Systems Languages (CODASYL), gave rise to COBOL in 1959. COBOL was developed within six months and was designed for business applications, which are typically file-oriented; it was not designed for writing system programs or for handling scientific applications.

Although COBOL is simple, portable and maintainable, it is very wordy and has a rigid format, so major COBOL applications typically run to more than 1,000,000 lines of code. Representing a huge investment, these million-line applications have proved long-lived and are still the “backbone” of the IT infrastructure of many financial institutions today. The Gartner Group estimated in 1997 that there were about 300 billion lines of computer code in use worldwide, of which about 80% (240 billion lines) were COBOL and 20% (60 billion lines) were written in all other languages combined.

Mainframe into Oblivion?

Despite the mainframe’s popularity and wide user base, the advent of newer technologies led many client-server experts in the 1990s to predict its “unplugging,” sunset, or demise. Industry expert Stewart Alsop predicted in 1991 that “… the last mainframe will be unplugged on March 15, 1996,” consigning what critics called a “Jurassic” platform (a nod to the age of the dinosaurs) to oblivion.

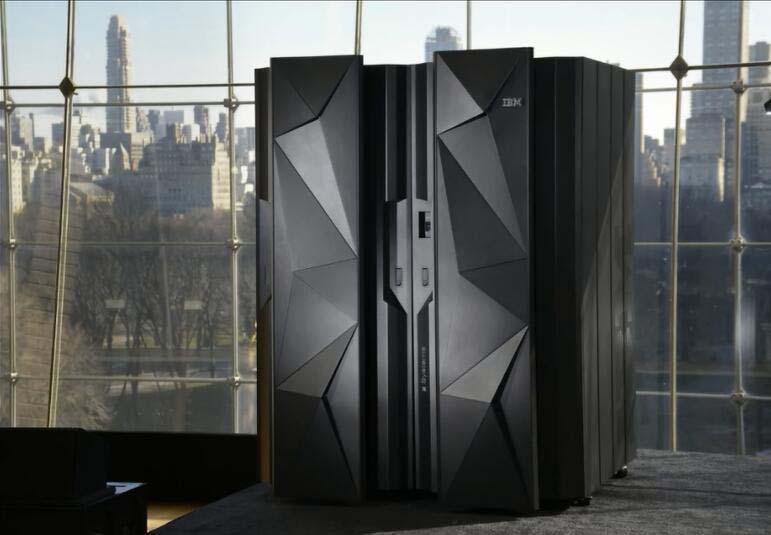

Ironically, in 2004 IBM celebrated the mainframe’s 40th birthday, and the following year it launched the z9, the latest generation of the zSeries platform and the result of over USD 1 billion in R&D investment. Linux on System z, meanwhile, gives the platform a truly open operating environment, and after the 50th-anniversary celebration in 2014 came the z13 enterprise server, IBM’s answer to the fast-growing areas of mobile, data analytics and cloud computing.

Why Mainframe and COBOL Stay

Why has the mainframe endured?

The mainframe has kept its traditional advantages over non-mainframe platforms: reliability, availability, scalability and security. Micro Focus currently estimates that in big businesses COBOL still accounts for 70-75% of all computer transactions and business data processing.

COBOL vs. Others

But there are C++, Java and J2EE. Why not use them?

Well, COBOL can do many things that other programming languages cannot do easily, or cannot do at all. These include accessing indexed-sequential files, producing formatted numeric output, running in a pseudo-conversational interactive environment, accessing hierarchical and network databases, and processing very large files. Add to this that many other languages lack COBOL’s built-in concept of “records,” which is vital for business applications. This is what sets COBOL apart. Note that C++, Java, Visual Basic, etc. were not developed to compete with or replace COBOL.

It’s just that processing large files, producing formatted reports, and providing an online interface for hundreds of concurrent in-house users of a business application are COBOL’s turf. (After all, that’s what the “B” in COBOL stands for: Business.)
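To make the ideas of a “record” and of formatted numeric output more concrete, here is a minimal sketch in Python (not COBOL) of the work a COBOL program gets from its data declarations. The record layout, the field names and the `parse_record`/`edit_amount` helpers are hypothetical, loosely modelled on a copybook with `PIC 9`, `PIC X` and implied-decimal `V` fields; it assumes plain-text, unsigned, display-format digits and ignores signs, packed decimal (COMP-3) and EBCDIC.

```python
from decimal import Decimal

# Hypothetical layout, loosely modelled on a COBOL copybook such as:
#   01 CUSTOMER-REC.
#      05 CUST-ID    PIC 9(6).
#      05 CUST-NAME  PIC X(20).
#      05 BALANCE    PIC 9(7)V99.   (V = implied decimal point, not stored)
# Assumes plain-text (not EBCDIC), unsigned, display-format digits.
LAYOUT = [("cust_id", 6), ("cust_name", 20), ("balance", 9)]
RECORD_LENGTH = sum(width for _, width in LAYOUT)


def parse_record(line: str) -> dict:
    """Slice one fixed-width record into named, typed fields by position."""
    if len(line) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} characters, got {len(line)}")
    raw, pos = {}, 0
    for name, width in LAYOUT:
        raw[name] = line[pos:pos + width]
        pos += width
    return {
        "cust_id": int(raw["cust_id"]),
        "cust_name": raw["cust_name"].rstrip(),  # PIC X fields are space-padded
        # Implied decimal: shift the stored digits two places to the right.
        "balance": Decimal(raw["balance"]).scaleb(-2),
    }


def edit_amount(amount: Decimal) -> str:
    """Approximate a numeric-edited picture like Z,ZZZ,ZZ9.99:
    thousands separators, two decimals, right-aligned in 12 characters."""
    return f"{amount:>12,.2f}"


if __name__ == "__main__":
    record = "000042" + "JANE DOE".ljust(20) + "012345678"
    customer = parse_record(record)
    print(customer["cust_id"], customer["cust_name"], edit_amount(customer["balance"]))
```

In COBOL the layout and the edited picture are declared once in the DATA DIVISION: the compiler maps the fields onto the record, and a MOVE into a numeric-edited item does the formatting. In many general-purpose languages, as in this sketch, that work has to be written by hand.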

Migration Concerns

It has to be admitted, however, that several companies, such as Micro Focus and Sun, now offer migration technology that emulates the mainframe environment, moving applications off the mainframe without a complete rewrite and thus avoiding enormous manpower costs. (Just imagine the estimated 210 billion lines of COBOL in production today, and still growing!)

On top of this alternative, there are the following concerns about the viability of the mainframe:

1. Cost of mainframe hardware

2. Declining availability of COBOL skills

3. Integration cost

It should be noted that for mainframe platforms below 1,000 MIPS (million instructions per second), the traditional advantages of the mainframe (reliability, availability, scalability and security) over non-mainframe platforms are eroding very quickly. In such cases, the very high cost of mainframe hardware is difficult to justify compared with Intel/AMD servers that cost about a tenth as much. Hence the perception that mainframes below 500 MIPS will be a thing of the past within the next five years. The same cannot be said, however, for very large mainframes (4,000+ MIPS).

CIOs of companies using these large systems still believe the benefits outweigh the costs in their current situation, although they continually look for strategies that balance IT expenses, risk and innovation.

Skills Crisis

Behind the ‘skills crisis’ is the claim that COBOL will grind to a halt as its skills base shrinks. It is believed, however, that there will be no acute shortage in the short term, as the fall in the number of COBOL developers will be offset by a comparable reduction in the amount of COBOL development.

Nevertheless, employers need to address the issue in the long run, since from last year through 2020 the older generation of COBOL developers is leaving the workforce.

Future for Mainframe

Although there is still a consensus that integrating mainframe-based applications with distributed applications is too costly and complex, IBM keeps introducing new and better technologies that make it easier for independent software vendors (ISVs) to port their classic or Java-based applications to the mainframe.

Taking all of this into consideration, the prevailing view is that even though companies with sub-500 MIPS (and even most sub-1,000 MIPS) mainframes are gearing up to migrate to non-mainframe platforms, larger mainframe installations will continue to grow slowly in the coming years. Moreover, the recent release of the z13 attests to IBM’s commitment to its existing clients and shows that IBM sees a long-term future for the mainframe platform.

CLPS and Mainframe Training

This future is very much on the mind of CLPS, a global IT service and solutions company founded in 2005 with the initial goal of supplying qualified IT resources to financial institutions that wanted to tap the potential of new university graduates in China.

As there was no definitive mainframe training program at universities at that time, CLPS created a platform that used an actual mainframe programming environment to facilitate learning. Trainees logged in with real TSO IDs and compiled their programs as if they were working in the development environment of a bank’s legacy system. It started with just 12 new graduates. Over the past 10 years, the company has delivered 5,000 technology professionals to global banks and financial institutions, partnering with universities and colleges through its Internship Program to keep a continuous “supply” of talent to be trained and deployed.

CLPS Virtual Bank Training Platform (CLB)

But CLPS didn’t stop there.

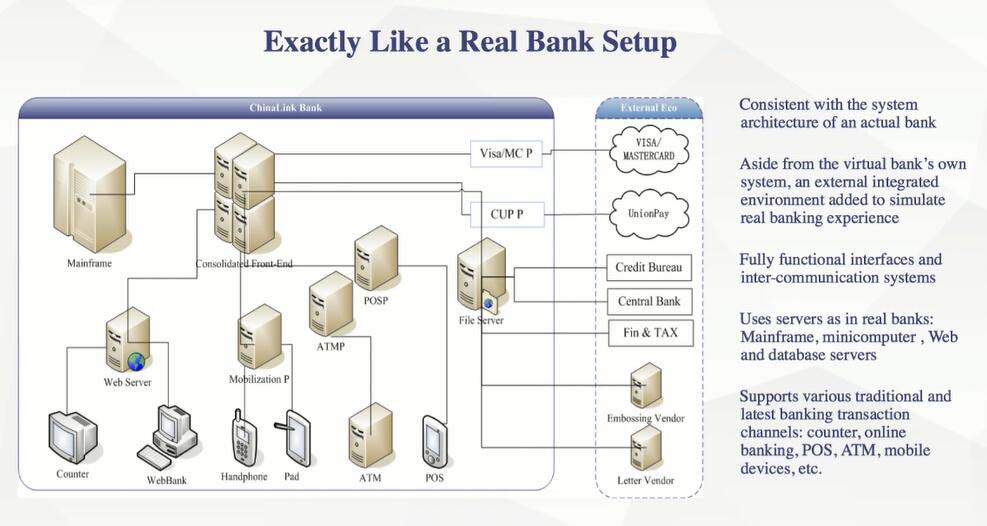

Understanding the need to equip students not just with programming basics but with the applicable, practical, in-demand skills that banks and financial institutions are looking for, CLPS independently designed a dedicated platform consisting of modular, real-world banking systems, the CLPS Virtual Bank, thereby creating a technology environment for training financial IT professionals.

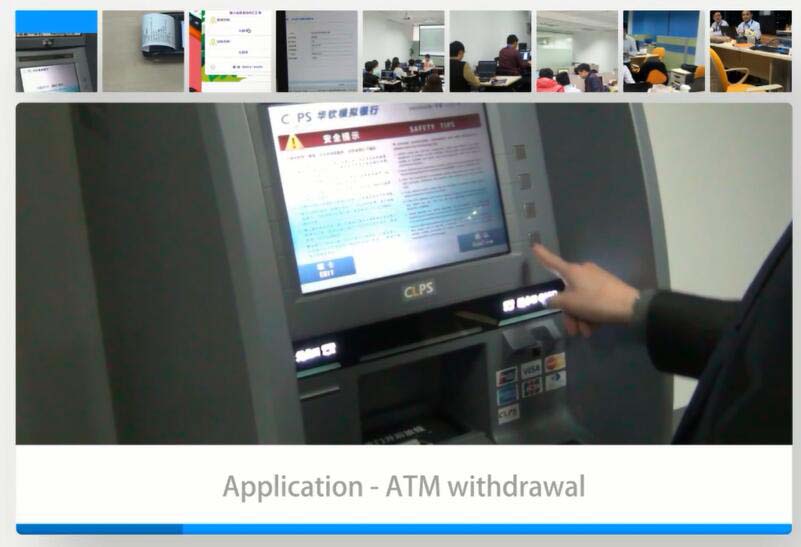

The CLPS Virtual Bank Training Platform (CLB) is not a theoretical course; it is a real-world system. Our ATM is plugged into it. It combines mainframe, online, mobile, back-, middle- and front-office technologies, covering the complete range of training needs for banking IT talent in technology, projects and business analysis, and it delivers individuals and teams with real experience in analyzing business requirements and implementing technology solutions.

It is indeed a complete banking technology training platform, one that also addresses the skills gaps that banks and financial organizations currently face.

More importantly, the Virtual Bank can be deployed in clients’ data centers or within CLPS’s own data centers. This means you can either build the whole system in-house with full control under a license model, or experiment with it safely, at no risk to your own equipment or network, paying only per-user usage fees under a SaaS model.

Conclusion

So there is no need to fret about a lack of direction for the mainframe.

The need is still there, the demand continues to exist, the ideal technology training platform is available, and the raw talents are out there waiting to be honed and put to use in this ever-challenging world of financial technology.
